A Milestone Year: LARK Lab Presents Two Papers at EMNLP 2025!

We’re thrilled to share that two of our papers were accepted and presented at EMNLP 2025, held this year in Suzhou, China: one in the Main Conference and one in the Findings of EMNLP.

As one of the top international conferences in Natural Language Processing (NLP) and Artificial Intelligence (AI), EMNLP is a premier venue for cutting-edge research in language technologies.

This milestone comes in the LARK Lab's first year and highlights our core research agenda at the intersection of large language models, reasoning, and trustworthy AI in healthcare.

Together, these works exemplify LARK Lab’s commitment to advancing foundational NLP methods for safe and explainable AI in critical decision-making.

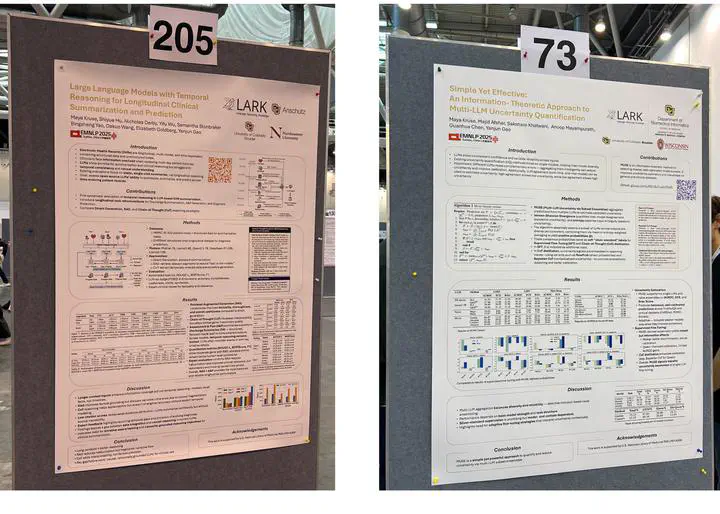

Paper 1 (Main Conference): Simple Yet Effective: An Information-Theoretic Approach to Multi-LLM Uncertainty Quantification (Kruse et al., EMNLP 2025)

Paper 2 (Findings Track): Large Language Models with Temporal Reasoning for Longitudinal Clinical Summarization and Prediction (Kruse et al., Findings EMNLP 2025)

🔍 Looking Ahead: Core Research Directions in the LARK Lab

These two papers reflect the core pillars of our lab's research agenda:

- Uncertainty estimation and calibration in LLMs, ensuring that models are honest about what they do and do not know.

- Temporal reasoning and multi-modal understanding, enabling LLMs to interpret longitudinal and context-rich clinical data.

- Knowledge-grounded generation and mechanistic interpretability, bridging foundational NLP with safe and transparent AI applications in healthcare.

Together, these studies form the basis of our ongoing work on Safe and Transparent Diagnostic AI, supported by the National Library of Medicine (NLM) R00 award [LM014308], and will continue to shape our future grant projects and collaborations.

🌟 Acknowledgement

We are deeply grateful to our collaborators and co-authors whose expertise and dedication made these works possible.

On our Main Conference paper (MUSE), we collaborated with the following researchers at the University of Wisconsin-Madison:

- Dr. Majid Afshar

- Dr. Anoop Mayampurath

- Dr. Guanhua Chen

They are our lab's long-term collaborators.

For our Findings paper, we thank:

From CU Anschutz (internal collaborators):

- Dr. Elizabeth Goldberg (Emergency Medicine)

- Dr. Samantha Stonbraker (College of Nursing)

From Northeastern University, for contributing valuable insights from their NLP and HCI expertise:

- Dr. Bingsheng Yao

- Dr. Dakuo Wang